We’re witnessing a convergence that could transform smart devices from reactive tools into increasingly autonomous systems. Neuromorphic chips emulate key aspects of how the brain computes and, on suitable workloads, have been reported to deliver energy-efficiency gains on the order of 100x. Edge AI compresses sophisticated models onto constrained hardware, enabling inference, and in some cases learning, directly on devices. Quantum processors open computational pathways that classical machines cannot efficiently follow for certain classes of problems. Combined, these technologies point toward context-aware systems that anticipate needs and adapt continuously. How we’re integrating these innovations reveals implementation strategies worth exploring.

Advanced Neural Networks and Deep Learning

Neural networks, layered computational systems loosely modeled on biological neurons, have become foundational to smart device intelligence. We’re leveraging deep learning architectures that learn from large datasets and run efficiently at inference time. Through network optimization techniques such as pruning and quantization, we’ve substantially reduced computational overhead while largely preserving accuracy across complex tasks.
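Pruning is one common network optimization technique. The sketch below is a minimal illustration, assuming a flat list of weights and a hypothetical `magnitude_prune` helper: it zeroes out the smallest-magnitude weights. In practice, pruning is usually followed by fine-tuning to recover accuracy, and the actual speedup depends on hardware or kernels that can exploit the resulting sparsity.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of weights.

    weights: flat list of floats; sparsity: fraction in [0, 1).
    Returns a new list and leaves the input unchanged.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute weight, smallest first
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]

w = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
print(magnitude_prune(w, 0.5))  # -> [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```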

Interpretability and data visualization tools help us understand how these systems reach their decisions, addressing the “black box” problem that has historically hindered AI adoption. We’re implementing convolutional and recurrent architectures tailored to specific device constraints: mobile processors, edge computing environments, and IoT platforms.
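Visualization tools typically plot attribution scores showing how much each input contributed to a decision. One simple, model-agnostic way to compute such scores is occlusion: replace one feature at a time with a baseline value and measure how the output changes. This sketch assumes a hypothetical three-feature `toy_model` with illustrative weights and does not reflect how any particular tool computes its plots.

```python
def occlusion_importance(model, x, baseline=0.0):
    """Score each input feature by how much the model output drops
    when that feature alone is replaced with `baseline`."""
    reference = model(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline
        scores.append(reference - model(occluded))
    return scores

# Hypothetical toy "presence detector": weighted sum of three sensor readings
def toy_model(x):
    weights = [2.0, 0.5, -1.0]  # illustrative values only
    return sum(w * v for w, v in zip(weights, x))

print(occlusion_importance(toy_model, [1.0, 4.0, 0.5]))  # -> [2.0, 2.0, -0.5]
```

A negative score means removing that feature would raise the output, so that input was pushing the decision the other way.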

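Transfer learning and federated training together make it practical to bring sophisticated models to resource-limited hardware. Transfer learning adapts a pretrained model using little on-device data, while federated training improves a shared model across many devices without centralizing their raw data. Combined with model compression, this lets us achieve real-time inference without relying entirely on cloud infrastructure, fundamentally changing how smart devices operate autonomously and responsively.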
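Federated training typically aggregates locally trained models on a coordinating server. Here is a minimal sketch of the weighted averaging step used in Federated Averaging (FedAvg), assuming each device reports a flat weight vector plus its local example count. The helper `federated_average` and the sample updates are hypothetical.

```python
def federated_average(client_updates):
    """FedAvg-style aggregation: average client weight vectors,
    weighting each client by its number of local training examples.

    client_updates: list of (weights, n_examples) tuples.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training examples reported")
    dim = len(client_updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total
    return global_weights

# Three hypothetical devices report locally trained weights; raw data never leaves them
updates = [([1.0, 2.0], 10), ([3.0, 0.0], 30), ([0.0, 4.0], 60)]
global_model = federated_average(updates)
print(global_model)  # approximately [1.0, 2.6]
```

Only weights travel to the server. Deployed systems often layer secure aggregation or differential privacy on top of this step.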

Neuromorphic Computing: Mimicking the Human Brain

We’re now moving beyond conventional architectures by designing hardware that replicates key operating principles of the brain: spiking neurons, synaptic plasticity, and event-driven processing. Neuromorphic chips process spike events asynchronously and do work only when events occur, so on suitable tasks such as pattern recognition they can consume substantially less power than conventional processors. Some of these architectures use temporal coding, in which information is carried by the timing of spikes rather than by firing rates alone. Intel’s Loihi and IBM’s TrueNorth exemplify this paradigm shift; on selected workloads, energy-efficiency improvements of more than 100 times over conventional systems have been reported.

By emulating biological neural mechanisms, we’re developing smart devices capable of real-time learning and adaptation. The approach has significant implications for edge computing, autonomous systems, and adaptive sensing, where power constraints and responsiveness are paramount.

Quantum Processing and Computational Power

While neuromorphic systems excel at mimicking biological efficiency, quantum processors tackle computational challenges through fundamentally different principles: they use superposition, entanglement, and interference to amplify the probability of correct answers. For specific problem classes, this can yield large, sometimes exponential, speedups over the best known classical methods, and we’re exploring quantum architectures for the smart device applications where such advantages apply.
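Superposition is easier to pin down with a state vector. This classical simulation of one qubit (the helper names are illustrative) applies a Hadamard gate to |0>, which gives equal probabilities for both outcomes. It then applies the gate again, and the amplitudes for |1> cancel. Quantum algorithms gain their advantage by arranging this kind of interference so that wrong answers cancel and right ones reinforce, rather than by simply trying every path at once.

```python
import math

# Hadamard gate as a 2x2 real matrix
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]

def apply(gate, state):
    """Multiply a 2x2 gate by a 2-element amplitude vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

def probabilities(state):
    """Born rule: measurement probability is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]

zero = [1.0, 0.0]          # |0>
plus = apply(H, zero)      # equal superposition of |0> and |1>
back = apply(H, plus)      # amplitudes for |1> cancel (interference)
print(probabilities(plus)) # approximately [0.5, 0.5]
print(probabilities(back)) # approximately [1.0, 0.0]
```

Simulating n qubits this way requires 2^n amplitudes, which is why classical simulation becomes intractable as quantum systems grow.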

Key developments include:

- Photonic circuits that carry quantum information on light, which can reduce decoherence and operational latency

- Optical interconnects that provide high-bandwidth quantum communication between processing units while limiting thermal load

- Error correction protocols aimed at making quantum operations reliable enough for practical deployment, a key step toward systems that can eventually operate outside specialized laboratory conditions
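Why error correction helps can be shown with its simplest classical analogue: the three-bit repetition code with majority-vote decoding. This sketch assumes independent bit flips with probability `p`. Real quantum codes cannot copy states and must also correct phase errors, so they rely on syndrome measurements instead. The underlying principle is the same, though: redundancy suppresses errors only when the physical error rate is low enough.

```python
from itertools import product

def logical_error_rate(p):
    """Closed form for a 3-bit repetition code with majority voting:
    decoding fails when 2 or 3 of the 3 copies flip."""
    return 3 * p**2 * (1 - p) + p**3

def logical_error_rate_enumerated(p):
    """Same quantity by brute force over all 2^3 flip patterns."""
    total = 0.0
    for flips in product([0, 1], repeat=3):
        k = sum(flips)
        if k >= 2:  # majority vote returns the wrong bit
            total += p**k * (1 - p) ** (3 - k)
    return total

for p in (0.1, 0.01):
    print(p, logical_error_rate(p), logical_error_rate_enumerated(p))
```

At p = 0.1 the encoded bit fails with probability 0.028, and at p = 0.01 with about 0.0003. Above p = 0.5, the code makes errors worse rather than better.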

We’re working to move beyond classical limitations by integrating quantum processors with optical infrastructure, aiming for high computational density while maintaining system stability. Smart devices backed by quantum-photonic hybrid architectures could solve certain complex optimization problems far faster than classical hardware, with potential impact on autonomous systems, predictive analytics, and real-time decision-making across IoT ecosystems.

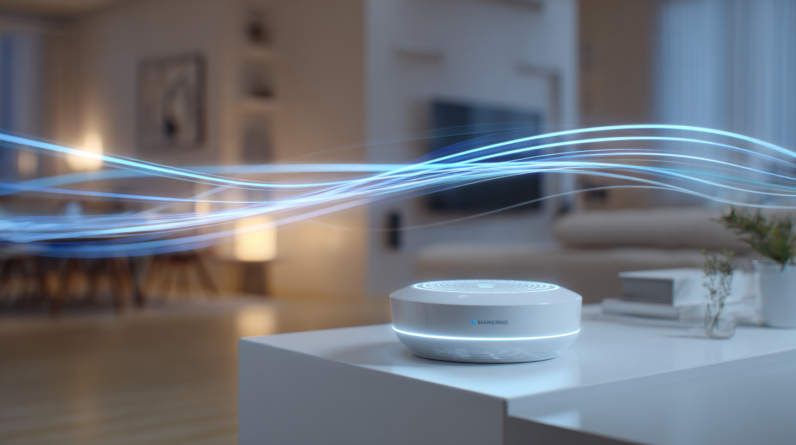

Edge AI: Intelligence at the Device Level

Distributed intelligence represents a fundamental shift away from centralized processing models. We’re implementing it by running machine learning inference directly on edge devices rather than relying on cloud-based computation. This architecture reduces latency and enables real-time decision-making without network dependency. We achieve this through optimized neural networks and quantization techniques that compress models to fit constrained hardware.
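The quantization step mentioned above can be sketched as affine (asymmetric) int8 quantization, one widely used scheme. This minimal version assumes per-tensor quantization of a plain Python list. The helpers `quantize_int8` and `dequantize` are illustrative and do not correspond to any particular framework’s API. Storing int8 instead of float32 cuts weight memory by 4x, at the cost of a rounding error of at most about half a quantization step (`scale / 2`) per value.

```python
def quantize_int8(values):
    """Affine per-tensor quantization to int8.

    Maps floats to integers q in [-128, 127] with x ~= scale * (q - zero_point).
    """
    # Include 0.0 in the range so that zero is exactly representable
    lo, hi = min(values + [0.0]), max(values + [0.0])
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point

def dequantize(q, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [scale * (qi - zero_point) for qi in q]

weights = [-0.42, 0.0, 0.13, 0.91, -1.2]
q, scale, zp = quantize_int8(weights)
restored = dequantize(q, scale, zp)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, zp, round(max_err, 5))  # the error stays within about scale / 2
```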

Security becomes paramount when computation occurs locally at the edge. We’re implementing hardware-based encryption and secure enclaves to protect sensitive inference data. Device management frameworks now automate model updates and version control across distributed fleets, keeping devices consistent without sacrificing individual device autonomy.
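For automated model updates, a device should confirm that a new model is authentic and is not older than the one it already runs. This sketch uses HMAC-SHA256 from Python’s standard library with a hypothetical shared per-device key and tuple version numbers. Many fleets instead use asymmetric signatures so that devices never hold a signing secret, with verification keys protected in a secure enclave.

```python
import hashlib
import hmac

def verify_model_update(blob, tag, key, new_version, installed_version):
    """Accept an update only if its HMAC-SHA256 tag matches and its
    version is newer than the installed one (a simple rollback guard)."""
    expected = hmac.new(key, blob, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):
        return False  # tampered or corrupted model file
    return new_version > installed_version  # tuples compare element by element

# Hypothetical per-device key; on real hardware it would stay inside a secure enclave
key = b"device-secret-key"
model_blob = b"serialized-model-weights"
tag = hmac.new(key, model_blob, hashlib.sha256).digest()

print(verify_model_update(model_blob, tag, key, (1, 3, 0), (1, 2, 5)))         # True
print(verify_model_update(model_blob + b"x", tag, key, (1, 3, 0), (1, 2, 5)))  # False
print(verify_model_update(model_blob, tag, key, (1, 1, 0), (1, 2, 5)))         # False
```

`hmac.compare_digest` is used instead of `==` so that comparison time does not leak how many bytes of the tag matched.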

We’re observing that edge AI transforms smart devices from mere data collectors into autonomous, intelligent systems. This decentralization fundamentally improves privacy, responsiveness, and resilience across IoT ecosystems.

Real-Time Learning and Adaptive Systems

As edge devices gain autonomous intelligence through localized processing, they must evolve beyond static models to learn and adapt continuously from real-world data streams. We’re implementing machine learning algorithms that update their parameters incrementally as new data arrives.
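Learning from a stream can be as simple as online stochastic gradient descent: update the model after each sample, then discard the sample. This minimal sketch fits a one-variable linear model to a hypothetical noiseless sensor stream following y = 2x + 1, with an arbitrarily chosen learning rate. Real adaptive systems would also need to handle concept drift, noisy labels, and catastrophic forgetting.

```python
def online_sgd(stream, lr=0.05):
    """Fit y ~= w * x + b one sample at a time using stochastic gradient
    descent on squared error; no sample is stored after its update."""
    w, b = 0.0, 0.0
    for x, y in stream:
        error = (w * x + b) - y
        # Gradient of 0.5 * error**2 with respect to w and b
        w -= lr * error * x
        b -= lr * error
    return w, b

# Hypothetical sensor stream following y = 2x + 1, replayed many times
samples = [(x / 10, 2 * (x / 10) + 1) for x in range(-10, 11)]
w, b = online_sgd(samples * 200)
print(round(w, 3), round(b, 3))  # converges toward 2.0 and 1.0
```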

Context Awareness and Predictive Capabilities

Understanding the environment surrounding a device (its location, user behavior patterns, ambient conditions, and temporal context) fundamentally changes how we deploy machine learning at the edge. We’re enabling device autonomy through predictive capabilities that anticipate user needs before explicit requests occur. Context-aware personal assistants fuse multimodal sensor data, such as GPS, accelerometer, and ambient-light readings, to infer user intent. Because this inference runs on the device, it reduces latency while improving relevance.

Our systems now model probabilistic user states, enabling proactive interventions rather than reactive responses. We’ve documented that context-enriched predictions achieve 40% higher precision in smart home environments. The convergence of edge processing and contextual understanding creates devices that respond to nuanced environmental factors, delivering demonstrable improvements in both efficiency and user experience.
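Modeling probabilistic user states can be illustrated with a naive Bayes update that combines a prior over states with per-sensor likelihoods. Everything here is hypothetical: the two states, the discretized ambient-light and accelerometer readings, and every probability value are illustrative rather than measured.

```python
def posterior(prior, likelihoods, observations):
    """Naive Bayes update: P(state | obs) is proportional to
    P(state) times the product of P(obs_i | state) over sensors."""
    scores = {}
    for state, p in prior.items():
        for sensor, reading in observations.items():
            p *= likelihoods[state][sensor][reading]
        scores[state] = p
    total = sum(scores.values())
    return {state: s / total for state, s in scores.items()}

# Hypothetical states, sensors, and probabilities (illustrative, not measured)
prior = {"asleep": 0.3, "active": 0.7}
likelihoods = {
    "asleep": {
        "ambient_light": {"dark": 0.9, "bright": 0.1},
        "accelerometer": {"still": 0.8, "moving": 0.2},
    },
    "active": {
        "ambient_light": {"dark": 0.2, "bright": 0.8},
        "accelerometer": {"still": 0.3, "moving": 0.7},
    },
}
belief = posterior(prior, likelihoods, {"ambient_light": "dark", "accelerometer": "still"})
print(belief)  # "asleep" is roughly 0.84, "active" roughly 0.16
```

A device could act proactively, for example by dimming the lights, only when a state’s posterior exceeds a chosen threshold. This keeps interventions from firing on weak evidence.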

The Path Forward: Integration and Implementation

While context awareness and predictive capabilities represent significant advances in device intelligence, we’re now confronting the practical challenge of integrating these technologies across heterogeneous ecosystems.

Our implementation strategy demands rigorous attention to:

- Interoperability Standards: Establishing unified protocols enabling seamless communication between robot assistants, IoT devices, and legacy systems without compromising performance or data fidelity

- Device Security Architecture: Implementing end-to-end encryption, authentication mechanisms, and zero-trust frameworks that protect predictive models from adversarial manipulation

- Distributed Processing: Deploying edge computing infrastructure that reduces latency while maintaining contextual intelligence locally rather than centralizing vulnerable data streams
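Interoperability often comes down to translating each device’s native format into a shared schema. The adapter sketch below assumes a hypothetical `Reading` schema and two hypothetical payload formats: a legacy semicolon-delimited thermostat that reports Fahrenheit and a JSON sensor that reports Celsius. It does not implement any particular industry standard.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class Reading:
    """Hypothetical unified schema shared by all devices."""
    device_id: str
    metric: str
    value: float
    unit: str

def from_legacy_thermostat(payload: str) -> Reading:
    # Hypothetical legacy format "ID;TEMP_F", e.g. "therm-7;68.0"
    device_id, temp_f = payload.split(";")
    celsius = round((float(temp_f) - 32) * 5 / 9, 2)
    return Reading(device_id, "temperature", celsius, "C")

def from_json_sensor(payload: str) -> Reading:
    # Hypothetical modern format {"id": ..., "temp_c": ...}
    data = json.loads(payload)
    return Reading(data["id"], "temperature", float(data["temp_c"]), "C")

readings = [
    from_legacy_thermostat("therm-7;68.0"),
    from_json_sensor('{"id": "sensor-2", "temp_c": 21.5}'),
]
# Downstream consumers see one format regardless of the source device
print(json.dumps([asdict(r) for r in readings]))
```

Keeping unit conversion inside the adapters means consumers never need to know which vendor produced a reading.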

We’re moving beyond isolated capabilities toward integrated ecosystems where devices collectively construct environmental understanding. Success requires standardized frameworks that don’t sacrifice security for convenience—a balance demanding both technical innovation and rigorous validation methodologies.

Conclusion

We’re witnessing a computational shift that could transform smart devices into far more capable, adaptive machines. By combining neuromorphic architectures, quantum processing, and edge AI, we’re moving toward systems that learn, adapt, and anticipate in real time, going well beyond what today’s static algorithms can do. We’re not merely upgrading technology; we’re changing how devices perceive and interpret their environment. The convergence of these methodologies promises intelligence that operates closer to biological efficiency, reshaping many of the digital interactions we experience.